Researchers have uncovered a major threat to the world of AI Models

June 6, 2024

Topics

- AI

- AI Models

Earlier this year, cybersecurity researchers at Wiz Research found a significant security flaw in the AI service provider Replicate that could have allowed attackers to access its users’ sensitive private information and AI models. The flaw was exploited through Cog, an open-source tool that Replicate uses to package models.

Replicate is an online platform that gives its users, typically software engineers and large businesses, the tools they need to deploy and scale AI through the thousands of AI models and generation programs it offers. However, Replicate’s AI models are packaged in formats that allow code execution, so an attacker could create a malicious AI model to target Replicate’s user base and obtain their information.

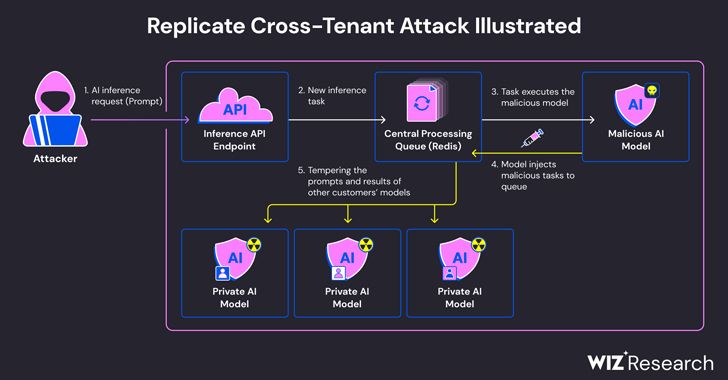

The code execution works by manipulating Cog’s model storage format. After containerizing a model using Cog, users can upload the resulting image to Replicate’s platform and start interacting with it. An attacker can embed malicious code in the container image of a model built with Cog and, once the platform runs it, interact with and control data inside Replicate’s model infrastructure. The underlying risk is believed to stem from AI companies running models from untrusted sources.

Attackers could perform this code execution through Cog, an open-source tool from Replicate that lets users package their AI models in a standard container. Cog gives users a consistent environment in which to run their AI models, share them with other users, and deploy them as HTTP services. Its other features include automatic Docker image generation and an automatic HTTP prediction server.
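To make this concrete, here is a minimal sketch of what a Cog model looks like on the Python side. It assumes Cog's documented `BasePredictor` and `Input` interface; the fallback stand-ins in the `except` branch and the echo logic are hypothetical and exist only so the sketch runs without Cog installed. The point is that a "model" packaged this way is ordinary Python chosen by its author, which the hosting platform then executes.

```python
try:
    from cog import BasePredictor, Input
except ImportError:
    # Hypothetical stand-ins so this sketch runs without Cog installed.
    class BasePredictor:
        pass

    def Input(description=""):
        return None


class Predictor(BasePredictor):
    def setup(self):
        # Runs once when the container starts; a real model would load
        # its weights here.
        self.prefix = "echo: "

    def predict(self, text: str = Input(description="Text to echo")) -> str:
        # Runs for every request. This body is arbitrary Python written by
        # the model author, so the host runs whatever is placed here.
        return self.prefix + text
```

Because `setup` and `predict` can contain any code, a platform that runs uploaded models is effectively running code from whoever uploaded them.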

To test this potential security threat, researchers at Wiz Research experimented with Cog on Replicate and on another AI service provider, Hugging Face, a data science company and platform that helps users build, develop, and store AI models. The researchers created a malicious Cog container and uploaded it to both platforms. After a lengthy process, they succeeded in conducting a cross-tenant attack: exploiting the malicious Cog container they had uploaded allowed them to query other customers’ models and modify those models’ output. With the tests succeeding on both platforms, the researchers were able to reach deep enough into each network that they could alter the AI models’ behavior as they chose.

Currently, there is no surefire way for users to prevent this kind of attack, which makes the discovery highly important. The best defense for users of these platforms is to develop AI models in formats designed to be safe, such as Hugging Face’s safetensors. Cloud providers, for their part, should enforce tenant isolation so that an attacker who gains a foothold cannot reach a wide range of user data.
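The reason data-only formats are recommended can be illustrated with a short sketch. It uses Python's standard `pickle` module, a serialization format that can run code while loading, as a stand-in; it is not the Cog technique the Wiz researchers used, and JSON stands in here for a data-only format such as safetensors. The `Payload` class and the harmless `6 * 7` expression are hypothetical placeholders for attacker-controlled content.

```python
import json
import pickle


class Payload:
    # pickle calls __reduce__ to learn how to rebuild the object; the
    # callable it returns is invoked by pickle.loads() on the loading side.
    def __reduce__(self):
        return (eval, ("6 * 7",))  # harmless stand-in for attacker code


blob = pickle.dumps(Payload())

# Loading the file runs eval("6 * 7"); the loader never asked for that.
loaded = pickle.loads(blob)

# A data-only format is parsed into plain values and never executed.
data_only = json.loads('{"weights": [0.1, 0.2]}')
```

In the pickle case, simply loading the file is enough to run the embedded expression, whereas the data-only load yields nothing but numbers and strings.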

The results of this experiment show that attackers could exploit the Cog tool within these AI providers to obtain and expose sensitive information and to drastically undermine the reliability and accuracy of the AI models they host. Replicate has informed its users about the flaw to raise awareness of the danger. The discovery sheds more light on the risks AI poses to people’s private data, and, as with all online and AI technology, the best way to protect yourself is through awareness and caution.